SPO

Objective

SPO (Self-Supervised Prompt Optimization) is a framework that automates prompt engineering for large language models (LLMs) through a self-supervised approach. It needs no external references or human feedback, and it substantially reduces the cost of optimization.

Features

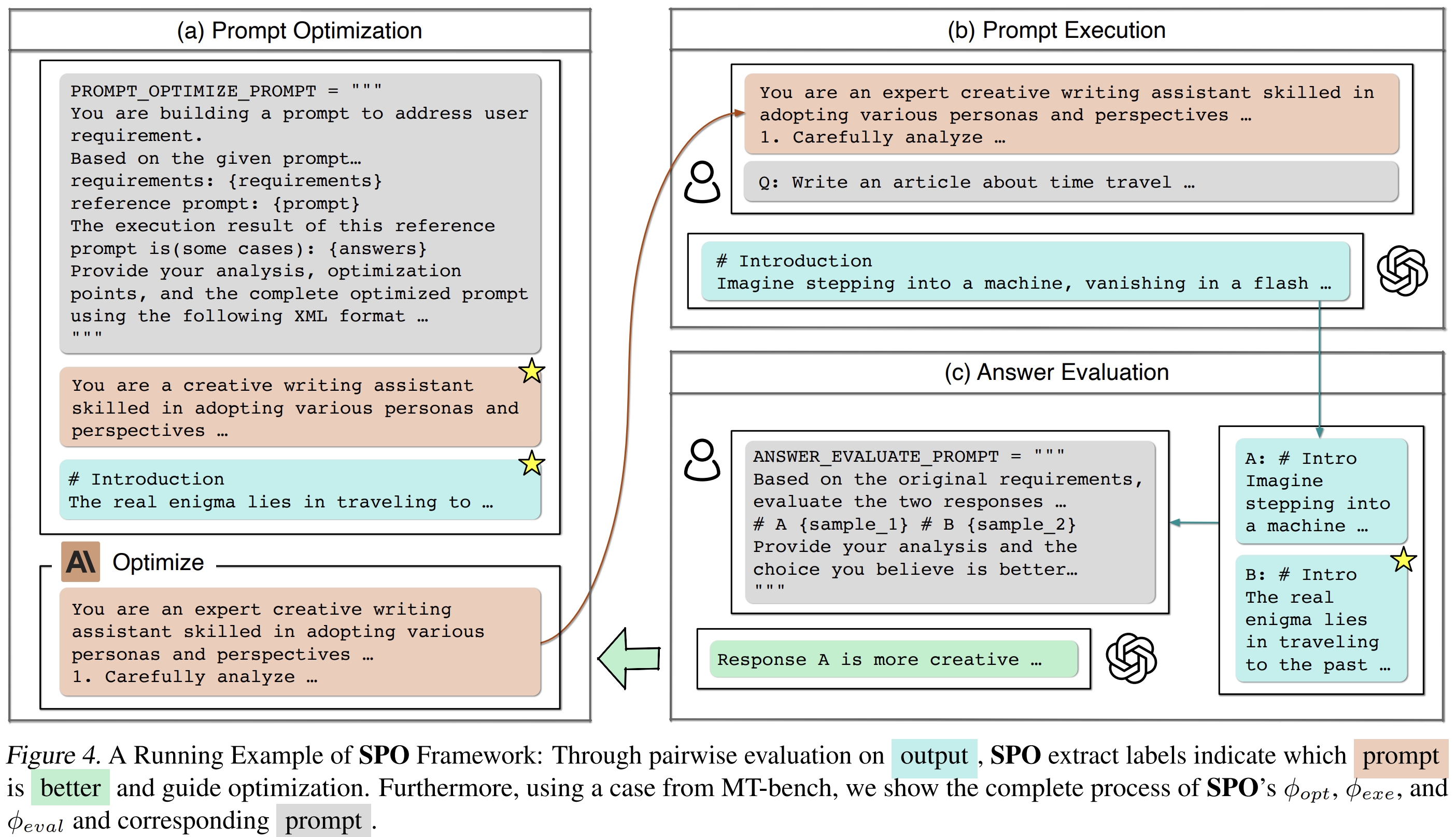

- Self-supervised optimization process using a three-phase cycle: execution, evaluation, and optimization

- Pairwise comparison mechanism that assesses relative quality of outputs from different prompts

- Zero-supervision design that requires no ground truth or human feedback

- Cost-efficient framework achieving 17.8-90.9× higher efficiency than conventional methods

- Support for both closed and open-ended tasks

- Flexible deployment options via Python script, command line, or user-friendly web interface

- Configurable LLM settings for optimization, evaluation, and execution phases

- Comprehensive logging and result tracking system
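
The execute, evaluate, optimize cycle and the pairwise comparison listed above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not SPO's actual implementation or API: `optimize_prompt`, `execute`, `is_better`, and `propose` are hypothetical names, and the `toy_*` functions are deterministic stand-ins for the three LLM roles so that the sketch runs without any model.

```python
from typing import Callable, List


def optimize_prompt(
    initial_prompt: str,
    questions: List[str],
    execute: Callable[[str, str], str],
    is_better: Callable[[List[str], List[str]], bool],
    propose: Callable[[str, List[str]], str],
    rounds: int = 10,
) -> str:
    """Hypothetical sketch of a self-supervised prompt optimization loop.

    execute(prompt, question)       -> output of the execution model
    is_better(new_outs, best_outs)  -> pairwise judgment by the evaluation model
    propose(best_prompt, best_outs) -> candidate prompt from the optimization model
    """
    best_prompt = initial_prompt
    best_outputs = [execute(best_prompt, q) for q in questions]

    for _ in range(rounds):
        # Optimization: derive a candidate from the current best prompt.
        candidate = propose(best_prompt, best_outputs)
        # Execution: run the candidate on the same small sample set.
        candidate_outputs = [execute(candidate, q) for q in questions]
        # Evaluation: compare the two sets of outputs against each other.
        # No ground truth or human label is consulted.
        if is_better(candidate_outputs, best_outputs):
            best_prompt, best_outputs = candidate, candidate_outputs

    return best_prompt


# Toy stand-ins (assumptions for illustration only; a real setup would
# call an LLM in each of these three roles).
def toy_execute(prompt: str, question: str) -> str:
    return f"{prompt} {question}"


def toy_propose(prompt: str, outputs: List[str]) -> str:
    hint = " Think step by step."
    return prompt if hint in prompt else prompt + hint


def toy_is_better(new: List[str], old: List[str]) -> bool:
    # Placeholder preference: longer total output wins.
    return sum(map(len, new)) > sum(map(len, old))


best = optimize_prompt(
    "Answer the question.",
    ["q1", "q2", "q3"],  # a small sample set
    toy_execute,
    toy_is_better,
    toy_propose,
    rounds=3,
)
print(best)
```

The point of the design is that the only signal steering the search is the relative judgment between two sets of outputs, which is why no reference answers are required. In this sketch a candidate replaces the current best prompt only when the comparison strictly favors it.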

Outcome

SPO outperforms state-of-the-art prompt optimization methods at only 1.1% to 5.6% of their cost, making advanced prompt engineering accessible to a broader audience. The framework automatically discovers prompts that enhance LLM reasoning and align outputs with task requirements across diverse domains. It delivers significant performance improvements from as few as three samples and without external references.